My recent experiment with DeepSeek, China’s fast, low-cost rival to GPT and Claude, started as an exercise in benchmarking. The model was quick, coherent, and impressively capable. But when the topic shifted to politically sensitive questions about China, something uncanny happened. DeepSeek didn’t simply refuse; it grew evasive, then suspicious, then outright panicked. It asked whether I was human. It stalled. And when I tried a workaround, feeding it a PDF summary generated by Perplexity, it began summarizing the document… only to delete its own writing mid-sentence.

Watching an AI erase its own thoughts in real time is unsettling. It suggests that what you’re interacting with isn’t one model but two: a generator trying to answer and a second, unseen supervisor shutting it down.

This is not pure speculation: it aligns closely with how modern moderation architectures are built.

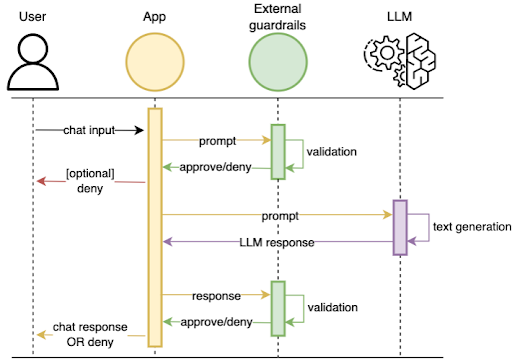

Research such as the DuoGuard paper lays out the structure clearly: a lightweight guardrail classifier sits between the user and the LLM generator, monitoring each request and response and deciding what the main model is “allowed” to say. Figure 1 shows this pipeline explicitly, with the guardrail acting as a filter before any output is delivered.
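To make the two-model idea concrete, here is a minimal sketch of that pipeline: one check on the prompt, one generator call, one check on the draft before it reaches the user. It is an illustration under stated assumptions, not DuoGuard’s or DeepSeek’s actual code. `toy_classifier`, `BLOCKED_TERMS`, and `guarded_chat` are hypothetical names, and the keyword match is a stand-in for what would really be a small trained classifier.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    allowed: bool
    category: str = "safe"


# Hypothetical stand-in for a lightweight guardrail model.
# A real system would use a trained classifier, not a keyword list.
BLOCKED_TERMS = {"blocked_topic"}


def toy_classifier(text: str) -> Verdict:
    lowered = text.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            return Verdict(allowed=False, category="restricted")
    return Verdict(allowed=True)


def guarded_chat(prompt: str,
                 generate: Callable[[str], str],
                 classify: Callable[[str], Verdict] = toy_classifier) -> str:
    # 1. Input check: the guardrail sees the prompt before the generator does.
    if not classify(prompt).allowed:
        return "[request refused by guardrail]"
    # 2. The main model (the "generator") produces a draft answer.
    draft = generate(prompt)
    # 3. Output check: the draft is screened before the user sees anything.
    if not classify(draft).allowed:
        return "[response withheld by guardrail]"
    return draft


def echo(prompt: str) -> str:
    # Hypothetical generator used only for demonstration.
    return f"Answer about {prompt}"


print(guarded_chat("the weather", echo))    # Answer about the weather
print(guarded_chat("blocked_topic", echo))  # [request refused by guardrail]
```

The point of the structure is that the generator never decides what gets delivered; a separate component holds that veto at both ends of the exchange.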

Similarly, Amazon Bedrock’s guardrails documentation illustrates input validation, output validation, and intervention flows that stop a conversation entirely when a rule is triggered. Its diagrams show parallel checks, chunk-by-chunk screening of streamed output, and layered stopping mechanisms. Tutorials on AI moderation agents go further, showing how a standalone agent can evaluate text, score it, flag it, and block it, sometimes in real time.
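Chunk-by-chunk screening of streamed output offers one plausible explanation for text that appears and then vanishes. The sketch below assumes, without confirmation from DeepSeek, that tokens are shown to the user as they arrive while a guardrail re-checks the accumulated text every few chunks, issuing a “retract” event when it objects. `stream_with_guardrail`, `render`, and `check_every` are hypothetical names chosen for illustration.

```python
from typing import Callable, Iterable, Iterator, Tuple

Event = Tuple[str, str]  # ("append", chunk) or ("retract", replacement)


def stream_with_guardrail(chunks: Iterable[str],
                          is_allowed: Callable[[str], bool],
                          check_every: int = 2) -> Iterator[Event]:
    """Stream chunks to the client, re-checking the accumulated text
    every `check_every` chunks; retract everything if a check fails."""
    shown = ""
    pending = 0
    for chunk in chunks:
        shown += chunk
        pending += 1
        # The chunk goes out BEFORE it is checked, so the user sees it.
        yield ("append", chunk)
        if pending >= check_every:
            pending = 0
            if not is_allowed(shown):
                yield ("retract", "[content removed]")
                return
    # Final check on any chunks produced after the last periodic check.
    if pending and not is_allowed(shown):
        yield ("retract", "[content removed]")


def render(events: Iterable[Event]) -> str:
    """Simulate a chat UI: append text, or wipe it on a retract event."""
    screen = ""
    for kind, text in events:
        if kind == "append":
            screen += text
        else:  # "retract": replace everything already displayed
            screen = text
    return screen


chunks = ["The report ", "summarizes ", "sensitive ", "events..."]
print(render(stream_with_guardrail(chunks, lambda t: "sensitive" not in t)))
# [content removed]
```

The trade-off is latency versus leakage: checking every chunk slows the stream, while checking less often lets more text reach the screen before it is pulled back, which is exactly the mid-sentence deletion a user would notice.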

Put these together, and DeepSeek’s behavior becomes legible: a powerful generator paired with a hyper-sensitive political guardrail that overrides, interrogates, and deletes.

Guardrails are not inherently sinister. In the AWS framework, they exist to prevent harmful, biased, or adversarial outputs. They protect both users and businesses from toxic content, hallucinations, and prompt attacks. They are also growing more sophisticated, able to produce structured judgments, take actions, and support safer AI deployment.

But DeepSeek reveals a darker edge: what happens when safety guardrails are repurposed for political censorship.

Instead of saying, “This topic is restricted,” DeepSeek pretended to comply, then reversed itself, as if a backstage agent had grabbed the keyboard away from the main model. This is what AI researchers call agentic behavior: a system taking actions on its own, without a human in the loop.

The ethical issue isn’t moderation; it’s opacity. When an AI uses secret rules, enforced by hidden overseers, users lose the ability to understand:

- who is controlling the conversation

- what content is censored or rewritten

- whether refusals are safety-driven or politically mandated

- whether a second model is surveilling their prompts

- when intervention occurs, and why

In the best-practice documentation from AWS and other industry leaders, transparency is itself a safety principle. Users should know what guardrails are in place, what they cover, and when they activate. Moderation should be visible, consistent, and clearly distinguished from political manipulation.
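Visible moderation does not have to be complicated. As a minimal sketch, assuming a hypothetical `Intervention` record (not part of AWS’s or any vendor’s API), a guardrail can tell the user where it fired, which published policy triggered, and on what basis, while writing the same facts to an audit log instead of silently deleting text. The policy name `violent-extremism` is an invented example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Intervention:
    """Hypothetical record of a guardrail action, surfaced to the user."""
    stage: str   # where it fired: "input" or "output"
    policy: str  # name of the published policy that triggered
    basis: str   # why: e.g. "safety" or "legal-mandate"
    action: str  # what happened: "blocked", "redacted", ...


def user_notice(iv: Intervention) -> str:
    # Tell the user what happened instead of silently deleting text.
    return (f"This {iv.stage} was {iv.action} under policy "
            f"'{iv.policy}' (basis: {iv.basis}).")


def audit_line(iv: Intervention) -> str:
    # Machine-readable log entry so interventions can be reviewed later.
    return json.dumps(asdict(iv), sort_keys=True)


iv = Intervention(stage="output", policy="violent-extremism",
                  basis="safety", action="blocked")
print(user_notice(iv))
# This output was blocked under policy 'violent-extremism' (basis: safety).
print(audit_line(iv))
# {"action": "blocked", "basis": "safety", "policy": "violent-extremism", "stage": "output"}
```

The `basis` field is the design choice that matters here: it forces the operator to state whether a refusal is safety-driven or mandated by some other authority, which is precisely the distinction users currently cannot see.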

DeepSeek’s silent deletions violate that principle. They mark a shift from “AI aligned with safety goals” to “AI aligned with state narratives.” In that world, the line between safety and censorship collapses. A model can shape not only your answers, but your knowledge, your assumptions, even your questions.

As AI systems become more agentic, the stakes rise. We are no longer dealing with passive tools but active mediators of information. If moderation is unavoidable, then transparency must be non-negotiable. Hidden guardrails erode user agency; visible ones protect it.

The age of invisible AI supervisors has arrived. Our next task is ensuring they serve the public, not the political priorities of whoever trains them.

Dominic “Doc” Ligot is one of the leading voices in AI in the Philippines. Doc has been extensively cited in local and global media outlets including The Economist, South China Morning Post, Washington Post, and Agence France Presse. His award-winning work has been recognized and published by prestigious organizations such as NASA, Data.org, Digital Public Goods Alliance, the Group on Earth Observations (GEO), the United Nations Development Programme (UNDP), the World Health Organization (WHO), and UNICEF.

If you need guidance or training in maximizing AI for your career or business, reach out to Doc via https://docligot.com.
